Now on DEIXIS online: Powering Down

If supercomputing is to reach its next milestone – exascale, or the capacity to perform at least 1 quintillion (10^18) scientific calculations per second – it has to tackle the power problem.

“Energy consumption is expensive, and usually the cost of maintaining such a large-scale system is as expensive as its price,” says Shuaiwen Leon Song, a Pacific Northwest National Laboratory staff research scientist. Already the average electricity used by each of today’s five fastest high-performance computing (HPC) systems equals that consumed by a city of 20,000 people, a paper Song coauthored notes. Such high power also could cause high temperatures that contribute to system failures and shorten equipment lifespan, Song adds. “Therefore, saving large amounts of energy will be very necessary as long as performance is not notably affected.”

The paper, presented at a conference earlier this year, offers a counterintuitive tactic to reach that goal: essentially turning down the power supplied to each of the myriad processors comprising an exascale system.

The technique, called undervolting, lowers on-chip voltages below currently permissible norms even as the number of power-hungry processors soars. Undervolting has risks, as malnourished transistors may be more likely to fail. But the team of six researchers says it has found a testable way to keep supercomputers running with acceptable performance by using resilient software.

The authors used modeling plus experiments and performance analysis on a commercial HPC cluster to evaluate “the interplay between energy efficiency and resilience” for HPC systems, the paper says. Their combination of undervolting and mainstream software resilience techniques yielded energy savings of up to 12.1 percent, early tests found.

Computers normally work within the limits of dynamic voltage and frequency scaling (DVFS), Song says. DVFS operates processors at voltages that are paired with specific frequency levels – the clock speeds at which processors run. If a voltage is less than what a given frequency requires or drops below the prescribed DVFS range, the most sensitive transistors can produce errors. That’s why “most commercial machines do not give users the option to conduct undervolting,” Song says.

Some are soft errors, he says – common transient faults such as memory bit flips that computers can correct on the fly. Hard errors, in contrast, often stop processors or crash the entire system.
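The pairing of voltage and frequency that Song describes can be pictured as a small lookup table. The sketch below is only an illustration: the table values and the names `DVFS_TABLE` and `is_undervolted` are hypothetical, not taken from the paper or from any real processor.

```python
# Hypothetical DVFS operating points: frequency (GHz) -> nominal voltage (V).
# Illustrative values only; real processors publish their own pairs.
DVFS_TABLE = {1.2: 0.90, 1.8: 1.00, 2.4: 1.10, 3.0: 1.20}


def is_undervolted(frequency_ghz, voltage):
    """Return True if the voltage is below the nominal one paired with this frequency."""
    nominal = DVFS_TABLE[frequency_ghz]
    return voltage < nominal


# Running at 2.4 GHz with 1.05 V is below the paired 1.10 V, so it counts as undervolting.
print(is_undervolted(2.4, 1.05))
```

Under this toy model, any voltage below the table entry for the current frequency falls into the regime where sensitive transistors may start producing errors.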

Soft errors caused by undervolting can be handled with mainstream software-level fault tolerance techniques, the study suggests. Meanwhile, under the team’s proposed techniques, operating frequencies can remain fixed, maintaining computational throughput, rather than varying with voltages.
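To see why lowering voltage at a fixed frequency saves energy, a rough sketch can use the textbook approximation for CMOS dynamic power, P ≈ C · V² · f. This model and the voltages below are assumptions for illustration; they are not the paper's method, and the result ignores static power and the overhead of resilience software, so it is not comparable to the 12.1 percent figure reported above.

```python
def dynamic_power(capacitance, voltage, frequency):
    """Textbook CMOS approximation: dynamic power ~ C * V^2 * f."""
    return capacitance * voltage ** 2 * frequency


def undervolt_saving(v_nominal, v_reduced):
    """Fractional dynamic-power saving when only voltage changes.

    Frequency and capacitance are held fixed, so they cancel out
    and the saving depends only on the voltage ratio squared.
    """
    return 1.0 - (v_reduced / v_nominal) ** 2


# Hypothetical voltages: dropping from 1.20 V to 1.10 V at the same clock speed.
saving = undervolt_saving(1.20, 1.10)
print(f"Estimated dynamic-power saving: {saving:.1%}")
```

Because power scales with the square of voltage in this approximation, even a modest voltage reduction yields a noticeably larger relative saving, which is what makes undervolting attractive if the resulting errors can be tolerated.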

Read more at DEIXIS: Computational Science at the National Laboratories, the online companion to the DOE CSGF’s annual print journal.

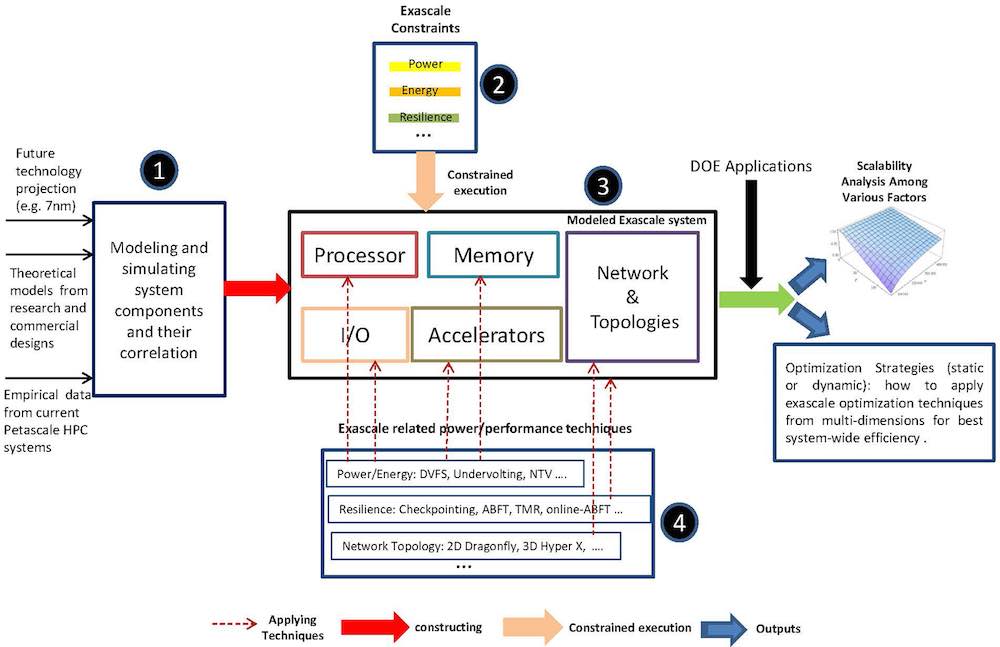

Image caption: The constraints at scale for building exascale architectures. The undervolting component (shown in 4) is part of ongoing work that the HPC group at Pacific Northwest National Laboratory (PNNL) and its collaborators are conducting in the effort to build energy-efficient HPC systems. Credit: PNNL.